Website analytics are great at telling you where there is a problem on your site.

However, analytics won’t tell you why it is a problem or how you could fix it. This is where you need to talk to your users and do some qualitative user research. Website metrics should also be interpreted with caution…

What analytics can (and can’t) tell you

There are a lot of different metrics you can consider when reviewing your analytics. To make things easier, we find it useful to group them into the following categories:

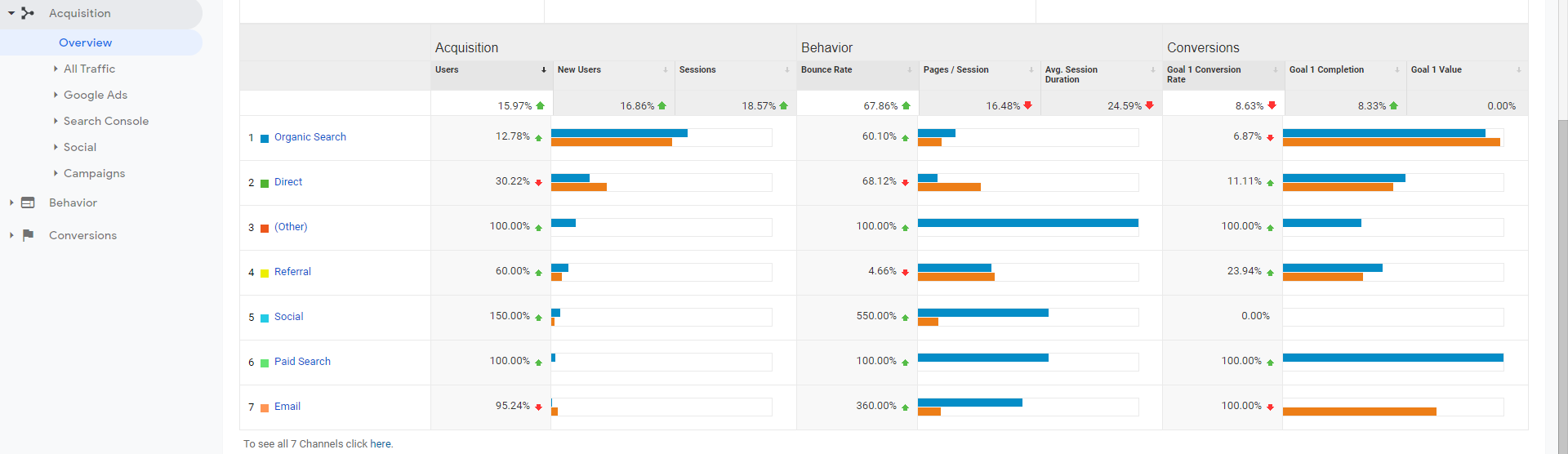

- Acquisition metrics: How users arrive at your website, e.g. number of sessions, percentage of new sessions, new users, traffic sources and social network referrals

- On-site behavioural metrics: What users do once they are on the site, e.g. bounce rates, pages per session, average session duration, landing pages, exit pages and on-page events

- Conversion metrics: Whether users take action, e.g. goal completions, goal conversion rates and cost per conversion

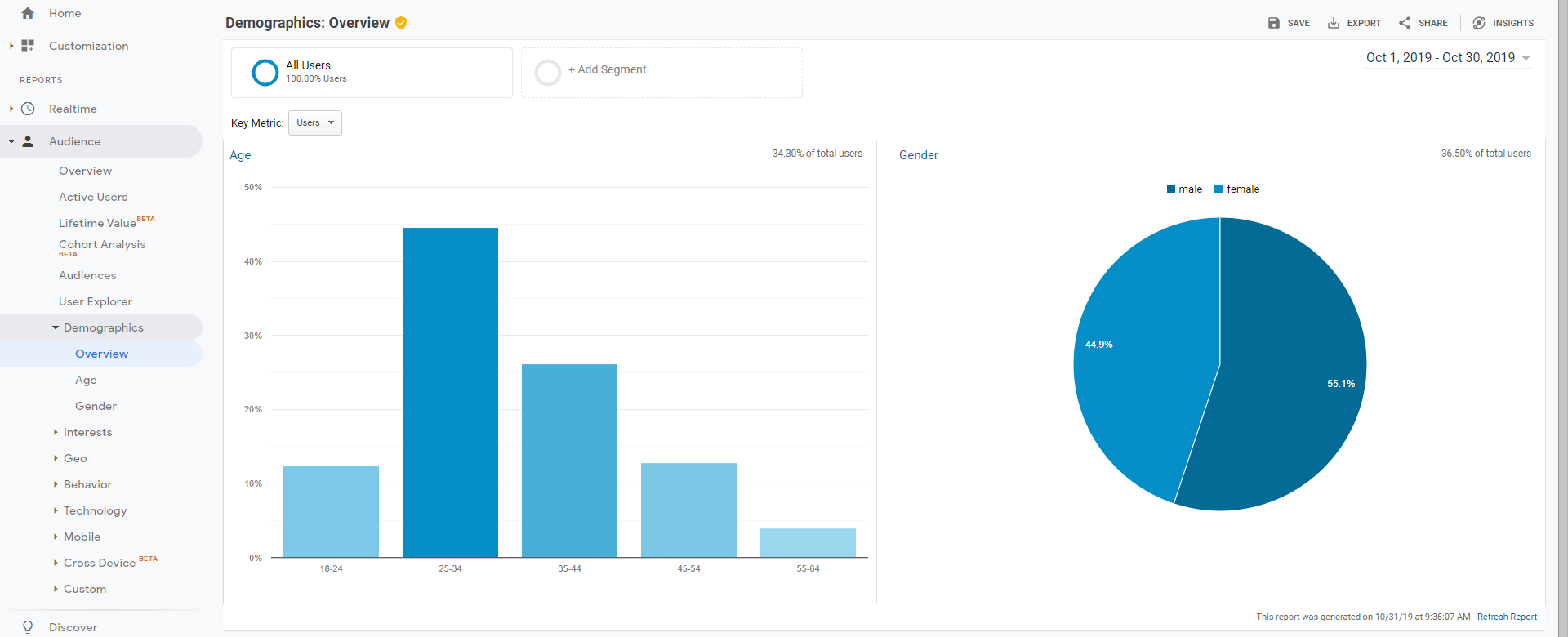

- Overarching contextual metrics: Who your users are, e.g. demographics (location, age, gender), devices used, and frequency and recency of visits

Acquisition metrics

Identifying how users arrive at your site is fairly straightforward. Source and session data are readily available on every major analytics platform, and can show which traffic sources are worth developing and nurturing.

However, acquisition data relies on cookies stored in the user’s browser, so it is not 100% reliable. For example, a user may visit the same website through multiple browsers or on different devices. In this case, each browser or device is counted separately, so a repeat visit shows up as a new, unique one. Rather than focusing on absolute values, look at how these metrics trend over time.

Behavioural metrics

Behavioural metrics are slightly more complex. Analytics can only reveal so much about exactly what users are doing on your website, and what it does reveal is wide open to misinterpretation. Here are some of the most common issues:

- Bounce rates – many clients come to us complaining of high bounce rates as though they are always a bad thing. They aren’t. As NN/g put it, "Not All Bounces Are Bad". For example:

  - A high bounce rate on a content page may actually mean the user quickly and easily found the information they needed and left with a positive impression of your site.

  - On the other hand, the user may have navigated to the page because a link gave off a strong information scent for their task, only to realise on arrival that it wasn’t the right page and leave. In that case, the high bounce rate points to a problem with the site’s information architecture and navigation.

- Average session duration – the longer a person spends on a page, the more engaged they are, right? Not necessarily! We have watched hours of usability testing in which participants spend ages on a page trying, and failing, to find the information they want. A high average session duration could be a sign that users can’t find what they need and that your page layouts need reviewing.

- Pages per session – a common interpretation is that viewing lots of pages per session indicates engagement. Again, we would disagree. A high page count can also mean that the user is roaming around the site trying, and failing, to find the information they want. That may point to problems with the content itself or with the information architecture, navigation and labelling.

Conversion metrics

Conversion metrics tell you whether your users are taking action on your website. If configured correctly, goal completions and goal conversion rates can be useful measures of your website’s success and can highlight where problems may lie. However, analytics alone usually can’t tell you why conversion rates are low.

Overarching contextual metrics

Working out who your users are, and how regularly they interact with your website, is another question that is tricky to answer from analytics alone. For example, demographic data is generated by reviewing a user’s browsing history and matching it to known behaviours for different age and gender categories. This doesn’t account for multiple people, including children, using the same machine, and it often covers only a portion of total visitors (on our site, only 36% of users).

How qualitative user research can help

By combining an audit of your website analytics with user research, you can get a much fuller understanding of how your site is performing for your users. Not only can you identify where problems lie, you can also find out why they exist and how to fix them.

If the analytics are flagging up problem areas, undertaking some user research or usability testing to uncover the cause is an obvious next step. For example:

- If your analytics show that conversions tend to happen after several visits to the site but it is not clear why, user research can help reveal what you need to do to improve those conversion rates. As NN/g say, “perhaps it takes so many visits to convert because users have difficulty comparing our offerings to those of competitors, or because they believe it will take too long to sign up and get started?”

- If your behavioural metrics suggest users are highly engaged with your site (long session times, multiple pages per session) but they are not taking action, a round of qualitative usability testing may reveal that what looked like engagement is actually confusion, as users struggle to navigate your site or make head or tail of your content pages.

A holistic approach of analytics + user research will allow you to identify where problems exist on your website, why they are a problem and how to fix them. Even if the analytics don’t ring any obvious alarm bells, it is still worth doing a qualitative research ‘dip’ from time to time to make sure there are no unexpected nasties lurking!
